Call it edge or call it whatever you want, just don’t give up on your users

Vercel killed the Edge. But what exactly is it that they killed?

Vercel made waves on Twitter recently by implying they are killing off the edge:

To be clear, they’re not discontinuing their edge product. But as one of the companies that has been pushing people to the edge the most aggressively, it definitely shows that they do not believe in the concept as much as before.

I wrote some words about it on Twitter myself.

In this article I take some time to present that in a more structured way. Without burying the lede: I believe there is considerable confusion about what the edge even is, and some of the perceived disadvantages of the edge are mostly a communication issue.

The other common complaint is that the database is in a single region anyway. This is one of the things Turso excels at, and we will look into an example showing how you can have your API with database access on Cloudflare Workers always 50ms away from your users, anywhere in the world, without breaking the bank.

And to make things even better, we’re also offering new users who want to replicate their database to multiple locations an exclusive discount: sign up by May 15th and upgrade to Turso's Scaler plan for 50% off for the first 3 months, including adding more locations!

#The original sin of the edge.

The edge is a geographical concept. But in the specific implementation of Vercel Edge, there was a catch: Vercel is a JavaScript-oriented company, and built their edge product as a serverless offering (not all edge providers are serverless). Vercel uses a JavaScript runtime that is optimized for serverless workloads.

This specific runtime brings good things to the table, such as much better cold starts and better compatibility with browser APIs, but it also has disadvantages. In particular, it is not compatible with Node.js. Even in the announcement where Vercel’s CEO talks about moving out of the Edge, notice what they are moving to: Node.js.

In my humblest opinion, this messaging became the main issue with (Vercel’s) Edge. A lot of the discourse moved away from the problem the edge is supposed to solve, namely doing things as close as possible to the user, and into the domain of the capabilities of the runtime.

#But there’s also the database problem…

The other reason you hear for why “the Edge” is not great, aside from all the issues with the runtime, is that your database is far from the code. That objection is obviously valid: if you have your database in a single location, executing code across the globe will not yield the best performance. But that’s exactly what Turso is designed to solve.

Data replication has been around for decades, so the question is: why not just add replicas everywhere? The answer boils down to two factors: data replication is hard, and it is expensive.

#Turso is not hard

Turso implements read-your-writes semantics, meaning that within the same function execution, you never have to worry about writing data and then immediately seeing the data go back in time. It is a relational database, a model everybody is familiar with, and there is no need to ever be aware of which replica you are hitting: there is a single URL that routes you to the closest replica automatically.
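To make that guarantee concrete, here is a minimal sketch of the pattern it protects: a function that writes a row and reads it back right away. The `SqlClient` interface, the `visits` table, and the in-memory stand-in are all assumptions made up for this illustration; they are not Turso's client API, and the stand-in says nothing about how Turso implements the guarantee internally.

```typescript
// Hypothetical minimal client shape, assumed for this sketch only.
interface SqlClient {
  execute(stmt: { sql: string; args: unknown[] }): Promise<{ rows: Record<string, unknown>[] }>;
}

// In-memory stand-in so the sketch runs without a database.
// It only understands the two statements used in recordVisit below.
function makeFakeClient(): SqlClient {
  const visits: string[] = [];
  return {
    async execute({ sql, args }) {
      if (sql.startsWith("INSERT")) {
        visits.push(String(args[0]));
        return { rows: [] };
      }
      return { rows: [{ total: visits.length }] };
    },
  };
}

// Write, then read, in the same function execution.
// Under read-your-writes, the read must observe the write that preceded it.
async function recordVisit(db: SqlClient, city: string): Promise<number> {
  await db.execute({ sql: "INSERT INTO visits (city) VALUES (?)", args: [city] });
  const result = await db.execute({ sql: "SELECT COUNT(*) AS total FROM visits", args: [] });
  return Number(result.rows[0].total);
}
```

With a real database, the only change to `recordVisit` would be the client passed in; the function itself does not need to know which replica serves the read.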

#Turso is not expensive

Let’s run a test from 10 locations across the globe, sending requests to a Cloudflare Worker. Requests to Cloudflare Workers will automatically be routed to the closest location, where our function is executed.

The function is simple, and will query something from a Turso database. For simplicity, each one of the load generator regions will fire 10% of the requests.

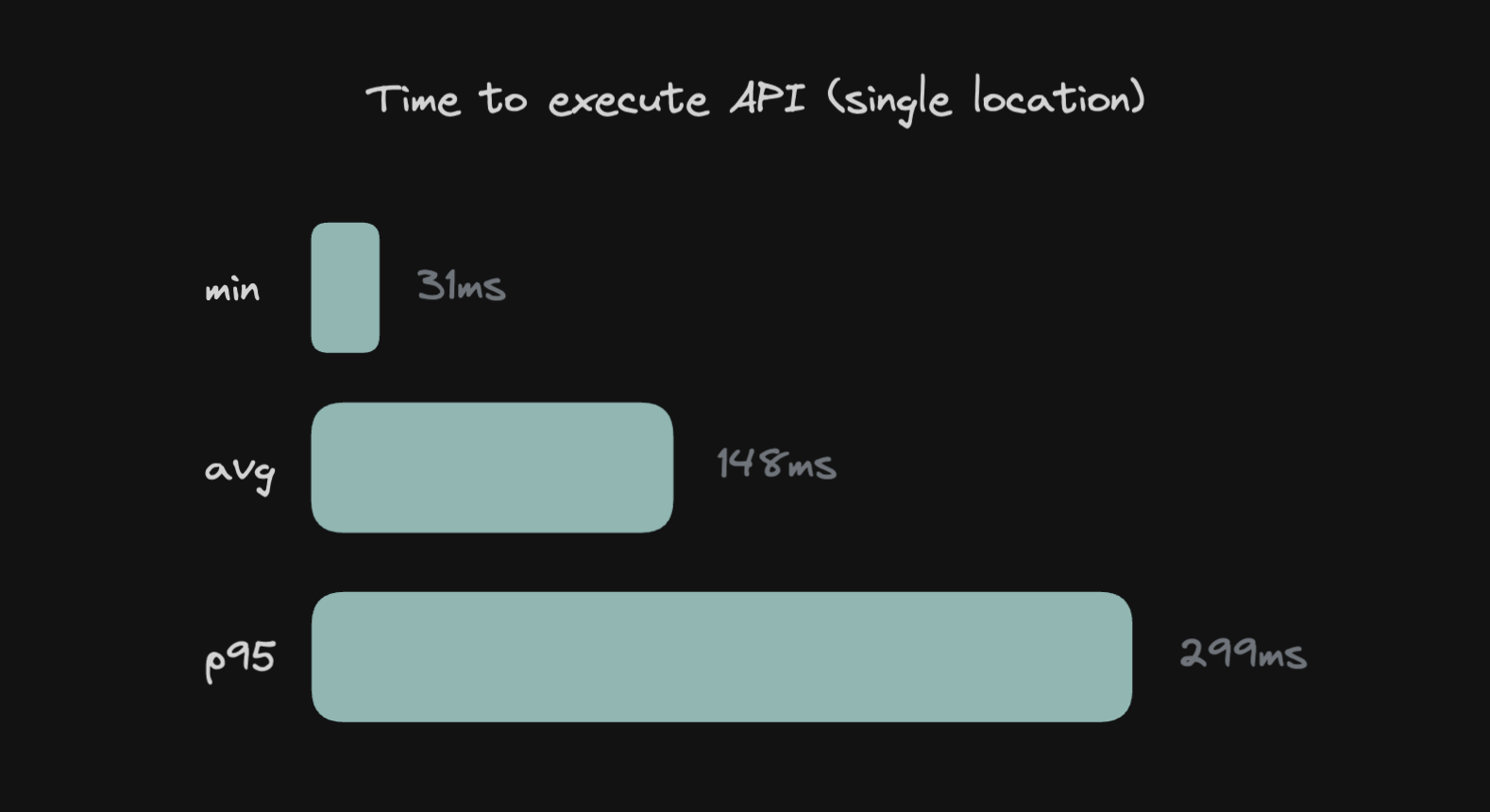

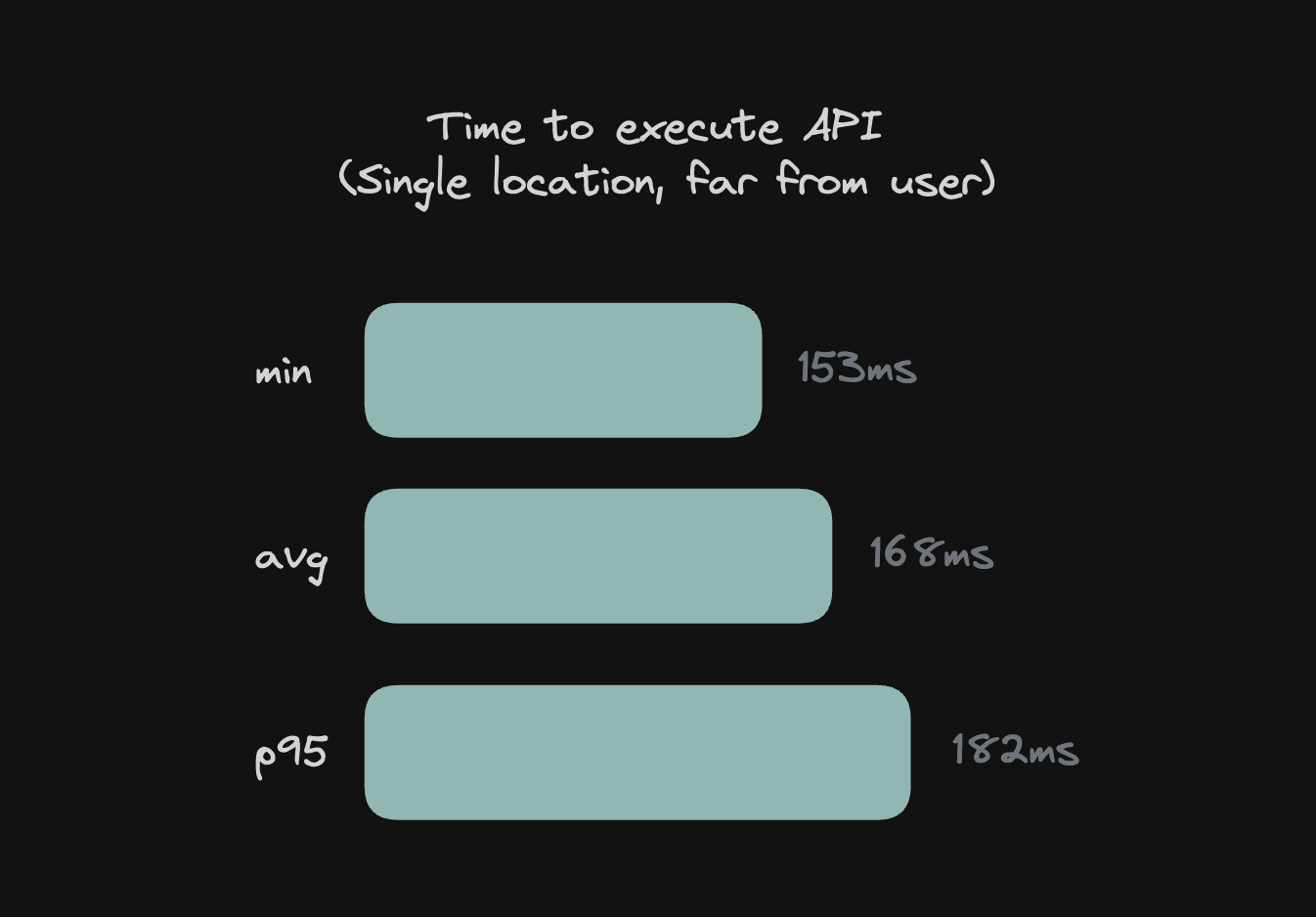

To establish a baseline, let’s look into what happens if the database is indeed in a single location:

An average of 148ms is not terrible. But the function itself is fairly simple and makes only one roundtrip to the database, so in practice, results would be much worse for more complex cases that make several.
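A back-of-the-envelope model shows why extra roundtrips hurt. The sketch below assumes queries run one after another and that each one pays the full Worker-to-database latency; the function name and the input numbers are made up for illustration and are not measurements from this test.

```typescript
// Rough model: one hop from the user to the nearest Worker, plus one
// Worker-to-database roundtrip per sequential query.
function estimateLatencyMs(
  userToWorkerMs: number,
  workerToDbMs: number,
  sequentialQueries: number,
): number {
  return userToWorkerMs + workerToDbMs * sequentialQueries;
}

// Made-up inputs for illustration only.
const oneQuery = estimateLatencyMs(20, 120, 1); // 20 + 120 * 1 = 140
const fourQueries = estimateLatencyMs(20, 120, 4); // 20 + 120 * 4 = 500
console.log({ oneQuery, fourQueries });
```

In this simplified model the database leg dominates as soon as a function issues more than one dependent query, which is why shortening that leg matters so much.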

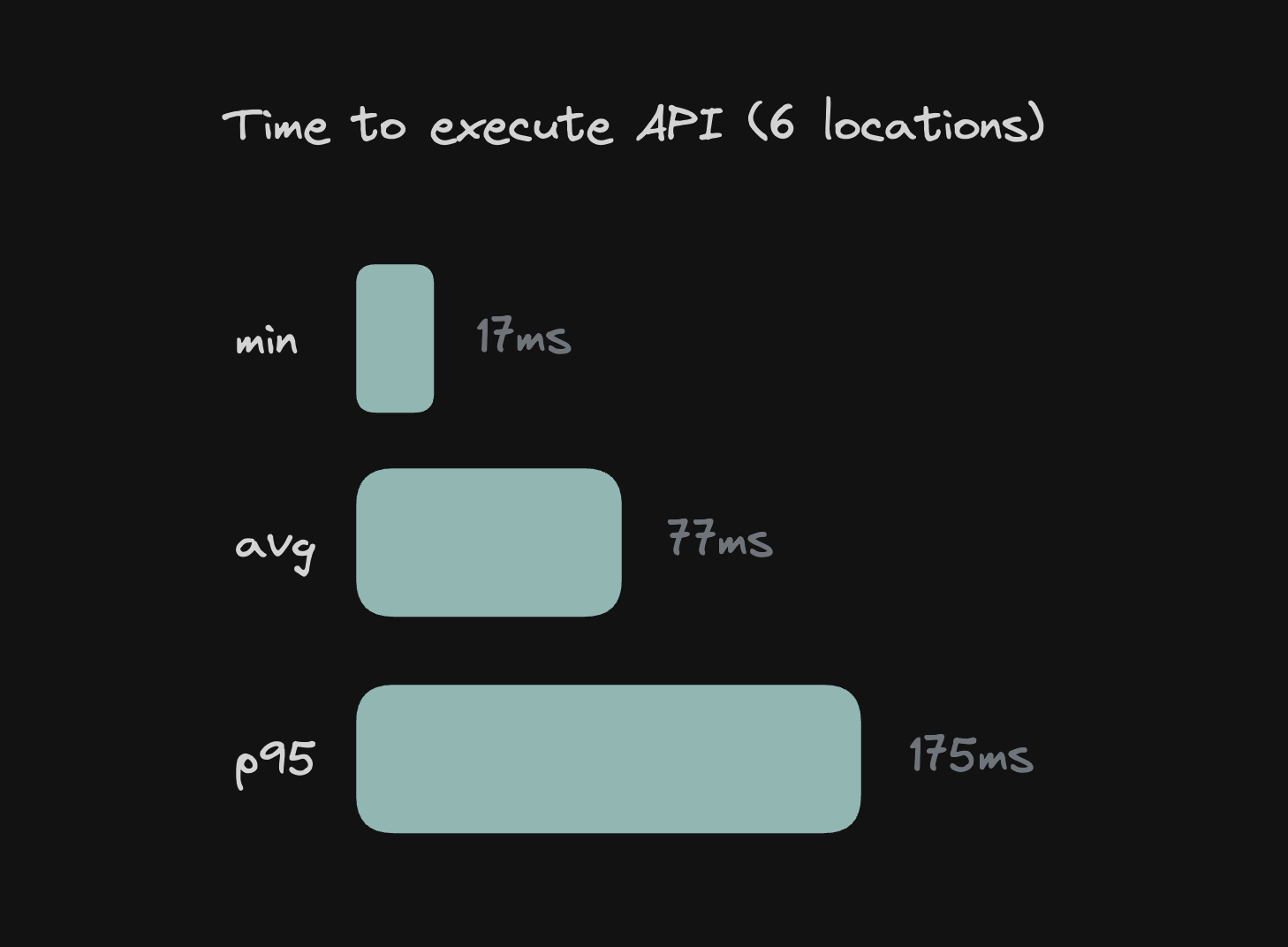

For $29/month, Turso can replicate the data in 6 locations (that's only $14.50/month for the first three months if you sign up here). How does that change the distribution of requests?

It’s clear that things are now much better. The average request is already below 100ms.

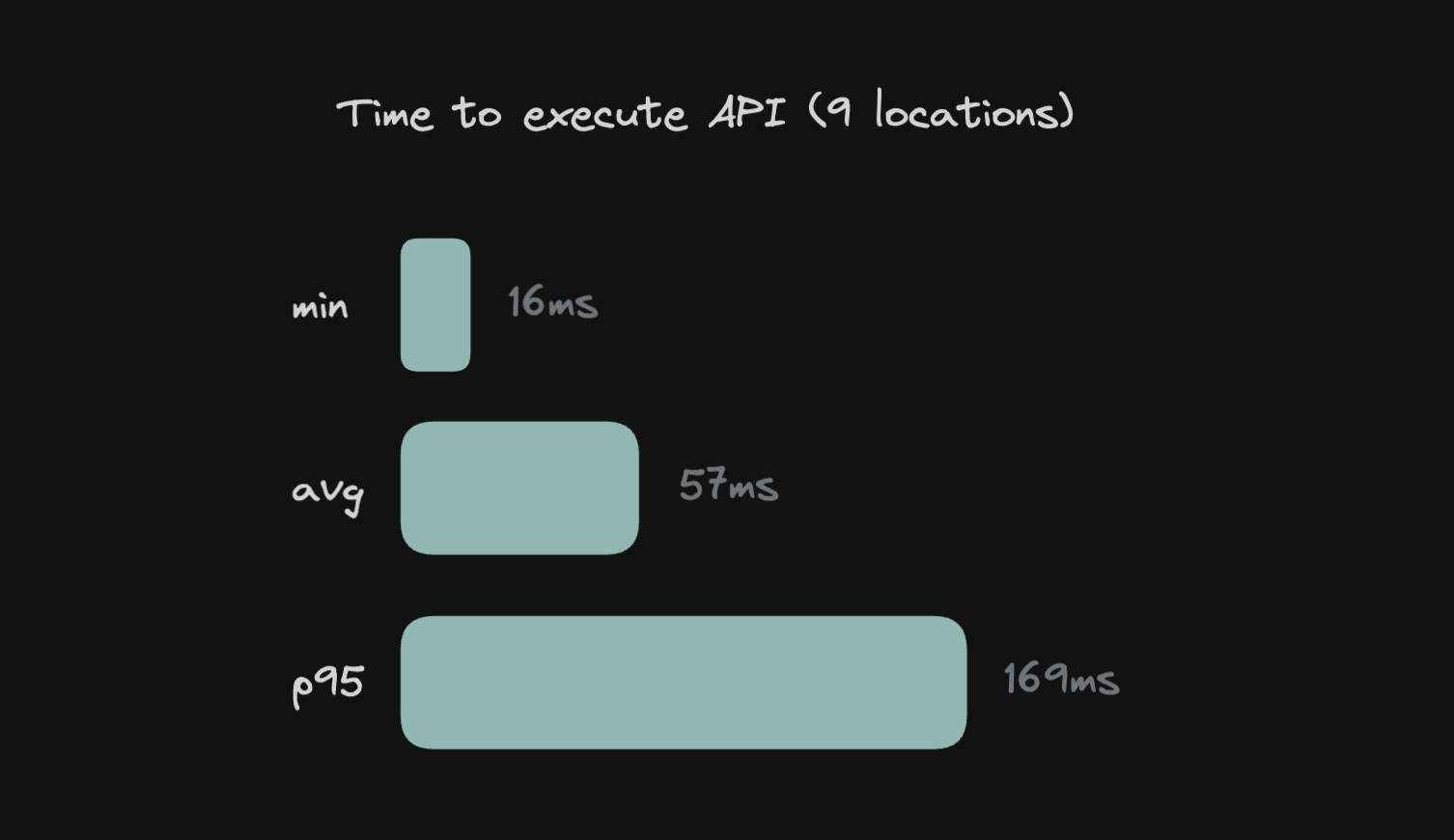

What if we added 3 more locations? Turso allows extra locations to be added as overages to the Scaler plan for $8 each. For a total of $53/month ($29 plus 3 × $8), or $26.50/month for the first three months if you sign up here, timings are as follows:

#Do not give up on your users

Let’s compare the results above with the scenario where the database is in a single location, but all the requests also come from a single location.

We will not show results for the case where both the database and the load generator are in the same location, because obviously the latencies are much lower than in any other scenario. If you do have your users all in a single place, then for sure, don’t mess with replication and just put all of your infrastructure in that location.

But here’s what you get if the database is in LHR, and the load generator in IAD.

What we see is that the results are much worse than when distributing both the code and the data.

#Call it whatever you want, but don’t give up on your users!

Maybe you keep calling it "the edge", or maybe you call it something else. One thing doesn’t change: if you have users in many parts of the world, putting both the code and the database close to them will improve their experience significantly. It doesn’t have to be hard, and it doesn’t have to be expensive. And you can sign up by May 15th to get Turso's Scaler plan for 50% off.