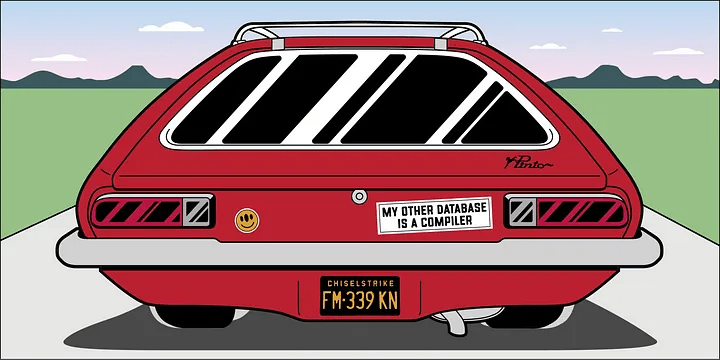

My other database is a compiler

Many years ago, innovation at the data layer meant creating a new database. Is it time for a new compiler instead?

We're Open Source: github.com/chiselstrike/chiselstrike

Many years ago, innovation at the data layer meant creating a new database. To no one's surprise, new databases appeared left and right at rapid pace. Things changed: fast forward to today, and even most of the projects calling themselves databases really are Postgres with a catch.

There is more innovation to be had by offering higher layers that make the experience of interacting with databases less painful. For example, bundling them into serverless offerings so that users won't have to manage them — since managing databases ranks, together with root canals, as top choices for a fun weekend for tech people.

In this article we will present work done by myself and my colleagues Pekka Enberg and Jan Plhák, in which we'll show how we can push the envelope further if we draw inspiration from our past as an industry.

Back in the day when people inserted big floppy drives into physical machines to boot them up, compute innovation meant a new instruction set for a new processor. But after a couple of decades of back and forth between RISC, CISC, Cray, Sun, x86, Itanium, and everything in between, it's very unlikely we'll be seeing a new relevant instruction set anytime soon. Innovation happens at the programming language level in finding new ways to interface with processors in a faster yet safe way, (wink wink Rust), or in how you connect those things together (hello M1!)

Inspired by this example, here's an idea: instead of thinking of new databases, should we write a compiler? And if we do, what kind of cool things can be unleashed?

This may sound far-fetched at first. But in this article we'll show that this is very much possible. We will also show how that can be used to solve a particular problem: the impedance mismatch between early-stage code like prototypes, example code and the like, to production grade code, allowing your code to stay relevant from prototype to production.

Consider the following example problem: “There are many people with similar names out there. Given a particular name, and a list of users, can we find the email of those that are likely to know why the save icon looks like this 💾” ?

class User {

name: string = '';

email: string = '';

age: number = 0;

}

If you are at the prototyping phase of your application, you can start with an in-memory array, and use array properties like filteror find to find a user in that array. Your code would look a little bit like this:

const users: User[] = [

{ name: 'Glauber Costa', email: 'glauber@nospam.me', age: 40 },

{ name: 'Glauber Costa', email: 'glauberjr@nospam.me', age: 15 },

{ name: 'Alvin Wisoky', email: 'alwinjr@wisoky.me', age: 21 },

{ name: 'Alvin Wisoky', email: 'alwin@wisoky.me', age: 41 },

];

const user = users.find(

(user) => user.name == 'Glauber Costa' && user.age >= 40,

);

return new Response(user?.email ?? 'not found');

That's a great prototype: it requires no advanced knowledge of anything other than TypeScript, you don't have to set up any infrastructure, and you have enough elements to test success and failure scenarios. You are ready to go!

But when the rubber hits the road, the data will not be in-memory: it will be stored in a database. Now you have to worry about which database will that be, what characteristics it has, what are the most efficient ways to query it, and how to wrap it all around conveniently in an ORM.

That's a a whole new skillset. Also, you may want the convenience of being able to use the original in-memory code in unit tests, but now your test code is different than production code.

To make your life easier you can, in theory, read all elements into a linear array and keep using your initial code. If you were to do it in ChiselStrike, for example, it would look something like the snippet below, with the findAll call returning all elements into an array. You can filter that array with your original arrow function expression:

const all = await User.findAll();

const user = all.find((user) => user.name == 'Glauber Costa' && user.age >= 40);

return new Response(user?.email ?? 'not found');

That will work well for your first 100 users. But as your dataset grows, your performance tanks. In a growing dataset with up to one million entries, we can query an endpoint with the code above, for the following results:

There are ways to make it better. You can try to avoid materializing all elements and page, which at least has the advantage of not blowing up all your memory. But that's just delaying the inevitable: if you want this to work well, you have to leave the comfort and cozy of TypeScript, and — hopefully through an ORM — dive into database code.

But when we think about it, all the information necessary to construct a query is already encoded in our arrow function expression. So why the hassle?

#We've seen this problem before!

Thinking from first principles, this problem is not very different from what compilers do. There are a large variety of processors, each doing more or less the same thing but with different instruction set architectures and memory models. The programming language establishes guarantees, and the compiler knows how to add memory fences to the right places, when it is safe to reorder instructions, and even the best way to do things.

“The best way to do things” is in itself comprised of two parts. One fact that blew my mind (many) years ago when I first find out, is that given a C/C++ function that returns 0:

int f() {

return 0;

}

Most optimizing compilers will not translate that to the obvious (obvious for those who know asm, that is) x86 machine code below:

f:

push rbp ; push register into the stack

mov rbp, rsp ; adjust the stack pointer

mov eax, 0 ; set the return register to 0

pop rbp ; pop the stack

ret ; return

The code above will save registers that are potentially destroyed by this function to the stack — which is part of the x86 function call convention, then set the return register to 0 with the mov operand (used to load a value into a memory location or register), restore the stack and return.

Instead, optimizing x86 compilers will do something a lot more clever instead:

f:

xor eax, eax ; exclusive-or to return register

ret

First, the compiler notices that this function doesn't really changes the value of any register outside itself, so the stack saving and restoring is unnecessary. But the clever part is to use the exclusive-or operator on the eax register against itself to set it to zero.

The result of applying the exclusive-or operator to every bit with itself is always zero, and this operation is much faster than a load. Using the exclusive-or operator to zero a variable is obvious in hindsight, but it is not something that someone writing machine code by hand would necessarily always think of doing. Not to mention the fact that xor being faster than a mov has to do with the way particular processor works, and not with any obvious mathematical principle. The compiler encodes the years of accumulated industry wisdom, which is now available for anybody.

Another thing compilers do that falls into “best ways of doing things” is perform a series of optimization steps. Compilers can find code that is never truly used and completely eliminate it (similar to the stack example above), transform bounded loops into non-conditional expressions, inline functions, or even not call functions at all when their result is known.

All of that allows programmers to not think about any of these optimizations and write code that favors other things, like readability, over obsessing about how to extract performance from the lower levels of the stack.

Sounds familiar?

#Compilers & databases?

So what prevents us from doing something similar for databases? Let's look at how this would apply to the arrow function in our example, user.age >= 40 && user.name == 'Glauber Costa' .

The first thing a compiler would do is parse your code into an Abstract Syntax Tree. We use swc to tokenize and parse the TypeScript code, and can then translate that into a filter expression in, say, SQL:

We can also encode the wisdom that this query would be faster once an index is created. There is no need to either create indexes in all possible columns (which is expensive), or expect the user to remember to handle them best (which is either slow or error prone). We know which columns would benefit from it and we can mark them as candidates for indexing (optimally, the final decision on whether or not to create the index depends on runtime factors like how frequently this query is expected to happen)

ChiselStrike's built-in compiler does exactly that: The query API accepts TypeScript arrow functions and the built-in compiler transforms the expressions into database expressions. It can also find out from those expressions which indexes should be created, so there is no need to specify them in the model.

// model definition

export class User extends ChiselEntity {

name: string = '';

email: string = '';

age: number = 0;

}

// endpoint code

const user = await User.findOne(

(user) => user.name == 'Glauber Costa' && user.age >= 40,

);

return new Response(user?.email ?? 'not found');

We also don't need to break the mental model of TypeScript into SQL (or even know if this is backed by SQL at all). The result?

Queries are as fast as they would be should we have written “machine code”, without having to worry about it in the process of taking your code from prototype to production.

#But what about runtime?

Say, for example, you want to extend the existing examples to return only valid emails. You would then extend your arrow function to add a validation check, using whatever validator you prefer:

// Validating emails with email-validator.

// Validation happens inline, straight inside the arrow function

const user = await User.findOne(

(user) =>

user.name == 'Glauber Costa' && user.age >= 40 && validate(user.email),

);

return new Response(user?.email ?? 'not found');

// Same code, but now splitting database and runtime code out-of-line.

// The results are equivalent in ChiselStrike.

const user = await User.findOne(

(user) => user.name == 'Glauber Costa' && user.age >= 40,

);

return new Response(validate(user?.email) ? user.email : 'not found');

Going through the AST once more, we can find branches that cannot be transformed into database expressions, and execute them using Deno. The biggest advantage is that they are executed only in the result set returned from the database — which is a lot smaller than the full working set.

If you think this translation is equivalent to executing the static part first, and then calling the validation code in the result, you would be right. The whole point is that you can code freely, and allow the translation layer to do its job as well as possible. The same way a compiler would. And indeed, they yield similar performance results:

#Where to from here?

There are a lot more to do (and if you are interested in doing it with us, we're hiring!)

- Compile imperative code, like aggregations: recent research indicates that it is possible to transform aggregation-like loops into database code, which we plan to do once we improve our internal representation.

- Automatic query projection: The compiler currently only inspects the lambda passed to the filter function. However, if we inspect whole endpoints, we can — via escape analysis — determine what properties of an entity are actually used, and perform automatic projection. That is, the runtime can simply not

SELECTunused columns in the underlying SQL query. - Use query profiling for index selection: whereas right now we always create an index when a candidate is found, optimally runtime information would be taken into account. This is not unlike PGO techniques in standard compilers.

- Push application logic to the database: the ChiselStrike compiler is limited to simple expressions, but we can do more: we can push application logic in some cases down to the database using stored procedures. Similarly, we can avoid runtime evaluation of some predicates by utilizing database functions (for example, querying current time and string conversions).

Useful links: